Linux Today

Discover how Pangolin Reverse Proxy's new dual licensing model enhances flexibility with its Enterprise Edition, catering to diverse business needs.

The post Pangolin Reverse Proxy Moves to Dual Licensing With New Enterprise Edition appeared first on Linux Today.

Discover how Fedora is paving the way for AI tools while advocating for transparency and oversight in technology. Explore the future of responsible AI.

The post Fedora Opens the Door to AI Tools, Demands Disclosure and Oversight appeared first on Linux Today.

Discover the latest features of DietPi 9.18, now supporting NanoPi R3S, R76S, and M5. Enhance your lightweight OS experience today!

The post DietPi 9.18 Adds NanoPi R3S, R76S, and M5 Support appeared first on Linux Today.

Discover the exciting updates in SuperTuxKart 1.5! Explore major changes and enhancements in this open-source kart racing game. Join the fun today!

The post SuperTuxKart 1.5 Open-Source Kart Racing Game Released with Major Changes appeared first on Linux Today.

Discover the latest features of VirtualBox 7.2.4, now with initial support for Linux Kernel 6.18. Upgrade your virtualization experience today!

The post VirtualBox 7.2.4 Released with Initial Support for Linux Kernel 6.18 appeared first on Linux Today.

Discover Clonezilla Live 3.3.0-33, now featuring enhanced support for cloning MTD block and eMMC boot devices. Streamline your backup process today!

The post Clonezilla Live 3.3.0-33 Adds Support for Cloning MTD Block and eMMC Boot Devices appeared first on Linux Today.

Discover the latest Ubuntu 26.04 LTS "Resolute Raccoon" daily builds. Download now to experience cutting-edge features and improvements in your system.

The post Ubuntu 26.04 LTS “Resolute Raccoon” Daily Builds Are Now Available for Download appeared first on Linux Today.

Discover the best Linux distros for beginners in 2025 with our comprehensive guide. Find the perfect fit for your needs and start your Linux journey today!

The post The Complete Guide to Best Linux Distros for Beginners in 2025 appeared first on Linux Today.

Discover the step-by-step guide to dual boot Windows and Linux on the same SSD. Maximize your system's potential with this comprehensive tutorial.

The post How to Dual Boot Windows and Linux on the Same SSD appeared first on Linux Today.

Discover the Raspberry Pi 5 Desktop Mini PC, your ultimate solution for internet radio. Stream your favorite stations with ease and enjoy high-quality sound.

The post Raspberry Pi 5 Desktop Mini PC: Internet Radio appeared first on Linux Today.

eWEEK

Technology News, Tech Product Reviews, Research and Enterprise Analysis

Google launches Gemini 3.1 Pro with major gains in complex reasoning, multimodal capabilities, and benchmark-leading AI performance.

The post Google Unveils Gemini 3.1 Pro, Touting a Leap in ‘Complex Problem-Solving’ appeared first on eWEEK.

Meta may revive its Malibu 2 smartwatch in 2026, pairing health tracking with a built-in Meta AI assistant as it expands its wearables lineup.

The post Meta’s ‘Malibu 2’ Smartwatch to Focus on Health Tracking, AI appeared first on eWEEK.

Meta has secured a patent for AI that could simulate a user’s social media activity after death, raising questions about consent, identity, and digital legacy.

The post Meta Patents AI That Could Keep Users Posting After Death appeared first on eWEEK.

Unitree plans to ship up to 20,000 humanoid robots in 2026 as China tightens its grip on the global robotics market, outpacing Western rivals.

The post China’s Unitree Aims to Ship 20,000 Humanoid Robots in 2026 appeared first on eWEEK.

Netflix sends cease-and-desist to ByteDance over Seedance AI video tool, alleging willful copyright infringement.

The post Netflix Calls ByteDance’s AI a ‘Piracy Engine’ in Escalating Copyright Showdown appeared first on eWEEK.

OpenAI’s GPT-5.3-Codex can exploit vulnerable crypto smart contracts 72% of the time, raising urgent questions about AI-powered cyber offense and defense.

The post OpenAI Just Showed That AI Can Drain a Crypto Wallet… on Purpose appeared first on eWEEK.

Google’s Gemini app rolls out Lyria 3 music generation in beta, turning text or photos into shareable 30-second tracks with automatic lyrics and cover art.

The post Google’s Lyria 3 Arrives in Gemini for Custom Music Creation appeared first on eWEEK.

Money talks. In AI, it also buys megawatts. Humain says it has poured $3 billion into Elon Musk’s xAI, a move that spotlights how the AI race is shifting from splashy launches to buildout math: capital, compute, power, and the places you can actually build. It’s also a rare case where a state-backed AI push shows […]

The post Saudi Arabia Invests $3B in Elon Musk’s xAI Empire appeared first on eWEEK.

OpenAI’s India push just got a serious power upgrade. In a new partnership with the Tata Group, OpenAI will anchor Tata Consultancy Services’ (TCS) HyperVault data center platform with 100 MW of AI-ready capacity, with an option to scale to 1 gigawatt over time, as reported by TechCrunch and the Times of India. OpenAI’s pitch […]

The post OpenAI’s Tata Tie-Up Puts 100 MW of AI Compute on the Table in India appeared first on eWEEK.

The European Parliament disabled built-in AI features on lawmakers’ work devices, citing unresolved cloud-processing security and privacy risks.

The post European Parliament Blocks AI on Lawmakers’ Devices Over Security Fears appeared first on eWEEK.

Network World

Microsoft has announced that it is partnering with chipmaker Nvidia and chip-designing software provider Synopsys to provide enterprises with foundry services and a new chip-design assistant. The announcement was made at the ongoing Microsoft Ignite conference.

The foundry services from Nvidia, which will be deployed on Microsoft Azure, will combine three Nvidia elements: its foundation models, its NeMo framework, and its DGX Cloud service.

While 95% of businesses are aware that AI will increase infrastructure workloads, only 17% have networks that are flexible enough to handle the complex requirements of AI. Given that disconnect, it’s too early to see widespread deployment of AI at scale, despite the hype.

That's one of the key takeaways from Cisco’s inaugural AI Readiness Index, a survey of 8,000 global companies aimed at measuring corporate interest in and ability to utilize AI technologies.

After months of speculation that Microsoft was developing its own semiconductors, the company at its annual Ignite conference Wednesday took the covers off two new custom chips, dubbed the Maia AI Accelerator and the Azure Cobalt CPU, which target generative AI and cloud computing workloads, respectively.

The new Maia 100 AI Accelerator, according to Microsoft, will power some of the company's heaviest internal AI workloads running on Azure, including OpenAI’s model training and inferencing workloads.

Intel kicked off the Supercomputing 2023 conference with a series of high performance computing (HPC) announcements, including a new Xeon line and Gaudi AI processor.

Intel will ship its fifth-generation Xeon Scalable Processor, codenamed Emerald Rapids, to OEM partners on December 14. Emerald Rapids features a maximum core count of 64 cores, up slightly from the 56-core fourth-gen Xeon.

In addition to more cores, Emerald Rapids will feature higher frequencies, hardware acceleration for FP16, and support 12 memory channels, including the new Intel-developed MCR memory that is considerably faster than standard DDR5 memory.

According to benchmarks that Intel provided, the top-of-the-line Emerald Rapids outperformed the top-of-the-line fourth gen CPU with a 1.4x gain in AI speech recognition and a 1.2x gain in the FFMPEG media transcode workload. All in all, Intel claims a 2x to 3x improvement in AI workloads, a 2.8x boost in memory throughput, and a 2.9x improvement in the DeepMD+LAMMPS AI inference workload.

A lack of cloud management skills could be limiting in-house innovation and the benefits enterprises gain from implementing public cloud exclusively, driving more IT organizations to invest in hybrid cloud environments, according to recent research.

In one survey, software vendor Parallels polled 805 IT professionals to learn more about how they use cloud resources. The responses showed that a technical skills gap continues to concern many organizations deploying cloud. Some 62% of survey respondents said they viewed the lack of cloud management skills at their organization as a “major roadblock for growth.” According to the results, 33% of respondents pointed to a lack of in-house expertise when trying to get maximum value from their cloud investment. Another 15% of those surveyed cited difficulty finding the appropriate talent.

To get started as a Linux (or Unix) user, you need to have a good perspective on how Linux works and a handle on some of the most basic commands. This first post in a “getting started” series examines some of the first commands you need to be ready to use.

On logging in

When you first log into a Linux system and open a terminal window or log into a Linux system from another system using a tool like PuTTY, you’ll find yourself sitting in your home directory. Some of the commands you will probably want to try first will include these:

pwd -- shows you where you are in the file system right now (stands for “present working directory”)

whoami -- confirms the account you just logged into

date -- shows the current date and time

hostname -- displays the system’s name

Using the whoami command immediately after logging in might generate a “duh!” response since you just entered your assigned username and password. But, once you find yourself using more than one account, it’s always helpful to know a command that will remind you which you’re using at the moment.
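The four commands above can be tried together in one short session. This is a minimal sketch: the output depends entirely on the system and account in use, so none is shown, and the final check against id -un is an addition for illustration that is not part of the original list.

```shell
# Run the four "first login" commands in order.
pwd        # absolute path of the current directory
whoami     # name of the account currently in use
date       # current date and time
hostname   # name of this system (may be absent on very minimal installs)

# Illustrative extra check (not from the original list):
# id -un reports the same effective user name as whoami.
if [ "$(whoami)" = "$(id -un)" ]; then
    echo "whoami and id -un agree"
fi
```

Right after logging in, pwd would normally print the same path as the $HOME variable, which is an easy way to confirm that you are sitting in your home directory.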

Nvidia has announced a new AI computing platform called Nvidia HGX H200, a turbocharged version of the company’s Nvidia Hopper architecture powered by its latest GPU offering, the Nvidia H200 Tensor Core.

The company also is teaming up with HPE to offer a supercomputing system, built on the Nvidia Grace Hopper GH200 Superchips, specifically designed for generative AI training.

A surge in enterprise interest in AI has fueled demand for Nvidia GPUs to handle generative AI and high-performance computing workloads. Its latest GPU, the Nvidia H200, is the first to offer HBM3e, high bandwidth memory that is 50% faster than current HBM3, allowing for the delivery of 141GB of memory at 4.8 terabytes per second, providing double the capacity and 2.4 times more bandwidth than its predecessor, the Nvidia A100.

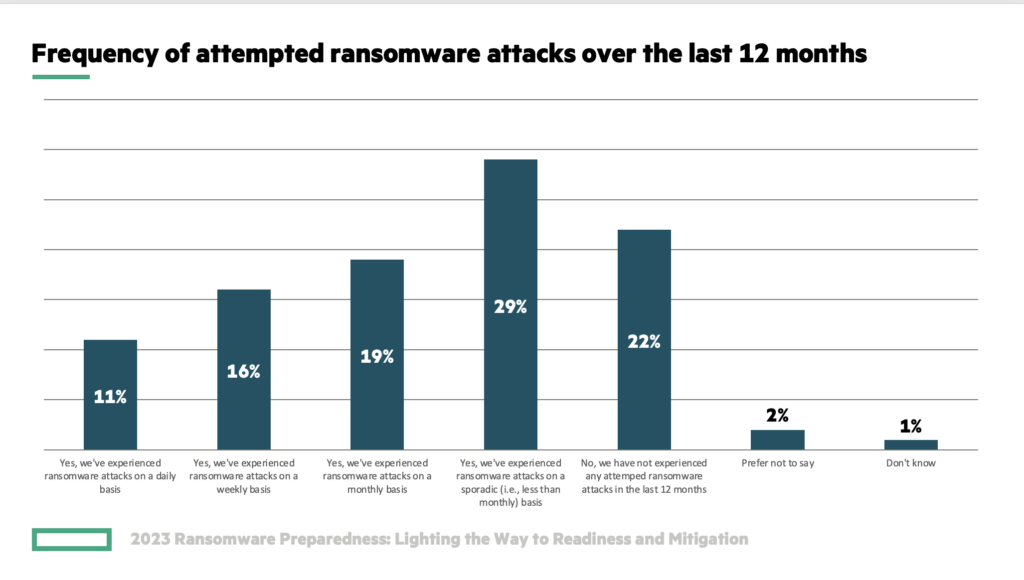

Ransomware is a growing threat to organizations, according to research independently conducted by Enterprise Strategy Group and sponsored by Zerto, a Hewlett Packard Enterprise company.

According to the report, 2023 Ransomware Preparedness: Lighting the Way to Readiness and Mitigation, 75% of organizations experienced ransomware attacks in the last 12 months, with 10% facing daily attacks.[i]

46% of organizations experienced ransomware attacks at least monthly—with 11% reporting daily attacks.

Cisco is taking a collaborative approach to helping enterprise customers build AI infrastructures.

At its recent partner summit, Cisco talked up a variety of new programs and partnerships aimed at helping enterprises get their core infrastructure ready for AI workloads and applications.

“While AI is driving a lot of changes in technology, we believe that it should not require a wholesale rethink of customer data center operations,” said Todd Brannon, senior director, cloud infrastructure marketing, with Cisco’s cloud infrastructure and software group.

LiquidStack, one of the first major players in the immersion cooling business, has entered the single-phase liquid cooling market with an expansion of its DataTank product portfolio.

Immersion cooling is the process of dunking the motherboard in a nonconductive liquid to cool it. It's primarily centered around the CPU but, in this case, involves the entire motherboard, including the memory and other chips.

Immersion cooling has been around for a while but has been something of a fringe technology. With server technology growing hotter and denser, immersion has begun to creep into the mainstream.

Frontier maintained its top spot in the latest edition of the TOP500 for the fourth consecutive time and is still the only exascale machine on the list of the world's most powerful supercomputers. Newcomer Aurora debuted at No. 2 in the ranking, and it’s expected to surpass Frontier once the system is fully built.

Frontier, housed at the Oak Ridge National Laboratory (ORNL) in Tenn., landed the top spot with an HPL score of 1.194 quintillion floating point operations per second (FLOPS), which is the same score from earlier this year. A quintillion is 10^18, so one quintillion FLOPS is one exaFLOPS (EFLOPS). The speed measurement used in evaluating the computers is the High Performance Linpack (HPL) benchmark, which measures how well systems solve a dense system of linear equations.

As digital landscapes evolve, so does the definition of network performance. It's no longer just about metrics; it's about the human behind the screen. Businesses are recognizing the need to zoom in on the actual experiences of end-users. This emphasis has given rise to advanced tools that delve deeper, capturing the essence of user interactions and painting a clearer picture of network health.

The rise of End-User Experience (EUE) scoring

End-User Experience (EUE) Scoring has emerged as a game-changer in the realm of network monitoring. Rather than solely relying on traditional metrics like latency or bandwidth, EUE scoring provides a holistic measure of how a user perceives the performance of a network or application. By consolidating various key performance indicators into a single, comprehensible metric, businesses can gain actionable insights into the true quality of their digital services, ensuring that their users' experiences are nothing short of exceptional.

Chipmaker Nvidia is reportedly working on producing three new graphics processing units (GPUs) for sale in China in order to circumvent the new export restrictions imposed by the Biden administration.

The new chips — namely the H20, L20, and L2 — could go on sale in China from November 16 after the new US restrictions come into force, according to a news report from Chinastarmarket, which cites sources in the industrial supply chain put in place by Nvidia to manufacture these new GPUs.

The Japanese government has allocated an extra $13.3 billion (two trillion yen) to boost its domestic semiconductor industry.

The funding is expected to be split between manufacturing and R&D, according to The Japan Times, which reported that the majority of the money will likely go to supporting TSMC and Rapidus. About $3.8 billion (570 billion yen, at the same exchange rate as the headline figure) will be assigned to a separate fund to enhance the stable supply of chips to Japan, the report said.

The decision comes at the end of a year where both government subsidies and company investments and innovations have sought to significantly bolster the country’s chip manufacturing abilities and place it at the cutting edge of semiconductor technology.

VMware has two new corporate partners, in the form of Intel and IBM, for its private AI initiative. The partnerships will provide new architectures for users looking to keep their generative AI training data in-house, as well as new capabilities and features.

Private AI, as VMware describes it, is essentially a generative AI framework designed to let customers use the data in their own enterprise environments — whether public cloud, private cloud or on-premises — for large language model (LLM) training purposes, without having to hand off data to third parties. VMware broke ground on the project publicly in August, when it rolled out VMware Private AI Foundation with NVIDIA. That platform combines the VMware Cloud Foundation multicloud architecture with Nvidia AI Enterprise software, offering systems built by Dell, HPE and Lenovo to provide on-premises AI capabilities.

It’s barely fall of 2023, but it’s already clear that CIOs aren’t particularly positive about their network plans for 2024. Of 83 I have input from, in fact, 59 say they expect “significant issues” in their network planning for next year, and 71 say that they’ll be “under more pressure” in 2024 than they were this year. Sure, CIOs have a high-pressure job, but their expectations for 2024 are worse than for any year in the past 20 years, other than during Covid. Nobody is saying it’s a “the sky is falling” crisis like the proverbial Chicken Little, but some might be hunching their shoulders just a little.

It seems that in 2023, all the certainties CIOs had identified in their network planning up to now are being called into question. That isn’t limited to networking, either. In fact, 82 of 83 said their cloud spending is under review, and 78 said that their data center and software plans are also in flux. In fact, CIOs said their network pressures are due more to new issues relating to the cloud, the data center, and software overall than to any network-specific challenges. Given all of this, it’s probably not surprising that CIOs say they spend less time on pure networking topics than at any time in the last 20 years.

IT leaders are investing in observability technologies that can help them gain greater visibility beyond internal networks and build more resilient environments, according to recent research from Splunk.

Splunk, which Cisco announced it would acquire for $28 billion, surveyed 1,750 observability practitioners to gauge investment and deployment of observability products as well as commitment to observability projects within their IT environments. According to the vendor’s State of Observability 2023 report, 87% of respondents now employ specialists who work exclusively on observability projects.

When evaluating the design of your backup systems or developing a design of a new backup and recovery system, there are arguably only two metrics that matter: how fast you can recover, and how much data you will lose when you recover. If you build your design around the agreed-upon numbers for these two metrics, and then repeatedly test that you are able to meet those metrics in a recovery, you’ll be in good shape.

The problem is that few people know what these metrics are for their organization. This isn’t a matter of ignorance, though. They don’t know what they are because no one ever created the metrics in the first place. And if you don’t have agreed upon metrics (also known as service levels), every recovery will be a failure because it will be judged against the unrealistic metrics in everyone’s heads. With the exception of those who are intimately familiar with the backup and disaster recovery system, most people have no idea how long recoveries actually take.

The UK government has revealed technical and funding details for what will be one of the world’s fastest AI supercomputers, to be housed at the University of Bristol — and one of three new supercomputers slated to go online in the country over the next few years.

Dubbed Isambard-AI, the new machine, first announced in September, will be built with HPE’s Cray EX supercomputers and powered by 5,448 NVIDIA GH200 Grace Hopper Superchips. The chips, which were launched by Nvidia earlier this year, provide three times as much memory as the chipmaker’s current edge AI GPU, the H100, and 21 exaflops of AI performance.

The reliability of services delivered by ISPs, cloud providers and conferencing services (such as unified communications-as-a-service) is critical for enterprise organizations. ThousandEyes monitors how providers are handling any performance challenges and provides Network World with a weekly roundup of interesting events that impact service delivery. Read on to see the latest analysis, and stop back next week for another update.

Internet report for October 23-29

ThousandEyes reported 221 global network outage events across ISPs, cloud service provider networks, collaboration app networks and edge networks (including DNS, content delivery networks, and security as a service) during the week of October 23-29. That’s up from 163 the week prior, an increase of 36%. Specific to the U.S., there were 103 outages. That's up from 75 outages the week prior, an increase of 37%. Here’s a breakdown by category:

Linux’s compgen command is not actually a Linux command. In other words, it’s not implemented as an executable file, but is instead a bash builtin. That means that it’s part of the bash executable. So, if you were to type “which compgen”, your shell would run through all of the locations included in your $PATH variable, but it just wouldn’t find it.

$ which compgen

/usr/bin/which: no compgen in (.:/home/shs/.local/bin:/home/shs/bin:/usr/local/bin:/usr/bin:/usr/local/sbin:/usr/sbin)

Obviously, the which command had no luck in finding it.

If, on the other hand, you type “man compgen”, you’ll end up looking at the man page for the bash shell. From that man page, you can scroll down to this explanation if you’re patient enough to look for it.
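A quicker way to confirm what compgen is, sketched below, is to ask bash itself. The snippet assumes bash is installed and on your $PATH; it wraps each check in bash -c so that it behaves the same even when your login shell is something else. The type and help builtins used here are standard parts of bash, but they are not mentioned in the text above.

```shell
# Ask bash how it classifies the name "compgen".
# type -t prints one word: alias, keyword, function, builtin, or file.
kind=$(bash -c 'type -t compgen')
echo "compgen is a: $kind"

# compgen -b lists every bash builtin, so compgen should list itself.
bash -c 'compgen -b' | grep -x compgen

# help documents builtins directly, without paging through the bash man page.
bash -c 'help compgen' | head -n 3
```

The first command should report builtin, which is why which, a tool that only searches directories in $PATH, comes up empty.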

Network management and visualization vendor Splunk, which is set to be acquired by Cisco in a $28 billion deal, will cut about 560 jobs in a global restructuring, the company announced Wednesday in an SEC filing.

Splunk president and CEO Gary Steele said in the filing that employees in the Americas set to lose their jobs will be notified throughout today, and that the company plans to offer severance packages to laid-off employees, as well as healthcare coverage and job placement assistance for an undisclosed length of time.

The data circulatory system

Data is the lifeblood of enterprise, flowing vital information through a network of IT veins and arteries to deliver resources exactly where they are needed. Like how innovations in medicine and nutrition have changed the way we live, advanced technologies like IoT, AI, machine learning, and increasingly sophisticated applications are necessitating change in where and how data lives and is processed.

Cisco is teaming with Aviz Networks to offer an enterprise-grade SONiC offering for large customers interested in deploying the open-source network operating system.

Under the partnership, Cisco’s 8000 series routers will be available with Aviz Networks’ SONiC management software and 24/7 support. The support aspect of the agreement may be the most significant portion of the partnership, as both companies attempt to assuage customers’ anxiety about supporting an open-source NOS.

While SONiC (Software for Open Networking in the Cloud) is starting to attract the attention of some large enterprises, deployments today are still mainly seen in the largest hyperscalers. With this announcement, Cisco and Aviz are making SONiC more viable for smaller cloud providers, service providers, and those very large enterprises that own and operate their own data centers, said Kevin Wollenweber, senior vice president and general manager with Cisco networking, data center and provider connectivity.

The rush to embrace artificial intelligence, particularly generative AI, is going to drive hyperscale data-center providers like Google and Amazon to nearly triple their capacity over the next six years.

That’s the conclusion from Synergy Research Group, which follows the data center market. In a new report, Synergy notes that while there are many exaggerated claims around AI, there is no doubt that generative AI is having a tremendous impact on IT markets.

Increased network complexity, constant security challenges, and talent shortages are driving enterprises to depend more on channel business partners, including managed service providers, system integrators, resellers and other tech providers.

Greater use of partners by enterprises is expected to continue over the next few years, experts say. IDC in its research on the future of industry ecosystems found that by the end of 2023, almost 60% of organizations surveyed will have expanded the number of partners they work with outside of their core industry.

Public cloud migration long ago wrested control over digital infrastructure from network and security teams, but now is the time for those groups to retake the initiative. Cloud operations and DevOps groups will never cede ground, but they will welcome self-service networking and security solutions that provide guardrails that protect them from disaster. Cooperation between traditional infrastructure teams and cloud teams is even more important as enterprises embrace multi-cloud architecture, where complexity and risk are increasing. In fact, my research has found that security risk, collaboration problems, and complexity are the top pain points associated with multi-cloud networking today.

A few months ago, Arm Holdings introduced the Neoverse Complete Subsystem (CSS), designed to accelerate development of Neoverse-based systems. Now it has launched Arm Total Design, a series of tools and services to help accelerate development of Neoverse CSS designs.

Partners within the Arm Total Design ecosystem gain preferential access to Neoverse CSS, which can enable them to reduce time to market and lower the costs associated with building custom silicon. This ecosystem covers all stages of silicon development. It aims to make specialized solutions based on Arm Neoverse widely available across various infrastructure domains, such as AI, cloud, networking, and edge computing.

Gear Latest
